In the beginning there is the relation – Pierpaolo Donati

Within the category of ‘using’ conversational agents there is a range of relational possibilities, which map onto human/agent, human/human and agent/agent interactions.

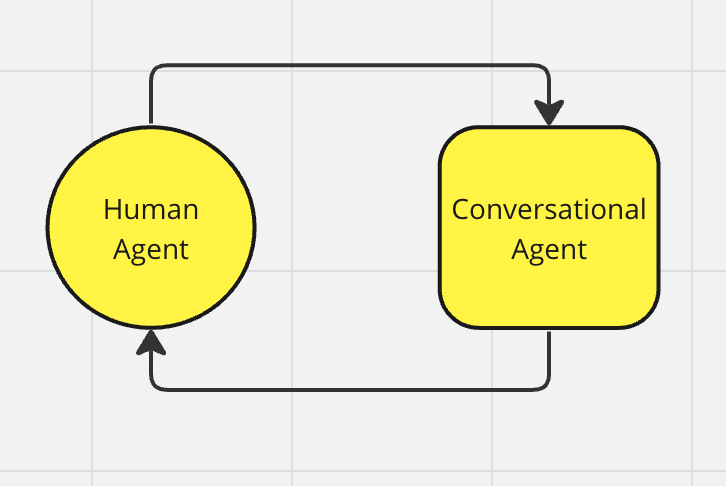

Single prompt command interaction with a conversational agent

Ongoing dialogue with a conversational agent (up to the technical limit of the context window)

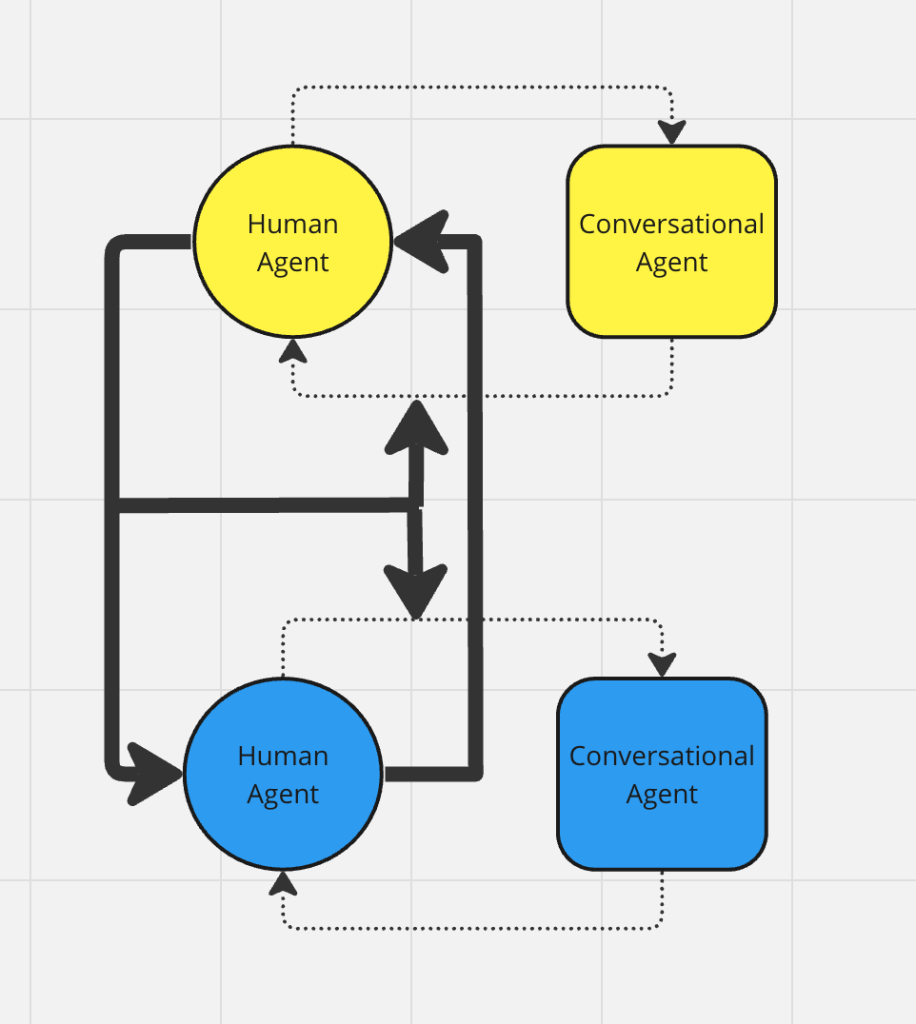

Human/human dialogue informed by parallel private dialogues with conversational agents

Human/human dialogue informed by parallel non-private dialogues with conversational agents (i.e. the parameters of the parallel conversations become one object amongst others of the human/human dialogue)

These are simplified representations which remain at the level of dyads and the relations between them, but these configurations can be scaled up by:

- Increasing the number of conversational agents each human agent enters into relation with, whether these are in turn dyadic relations (e.g. making the same request of a number of conversational agents), facilitated inter-agent dialogues (i.e. using the output from one agent as an input to another) or reflexive inter-agent dialogues (i.e. making the parameters of the interaction with each agent part of the reciprocal dialogues).

- Increasing the number of human agents involved in the collaboration, with each operating in a private dialogue with one or more conversational agents, in which case the conversational agents are (ideally) epistemic amplifiers but not epistemic participants in a full sense.

- Increasing the number of human agents involved in the collaborative parallel non-private dialogue with conversational agents, in which the parameters of these conversations are an object of a progressively more complex human/human dialogue as it is scaled up.

These configurations could be simplified by having multiple human agents relate to a single conversational agent in a defined and ongoing way, particularly if this involves building up a collective knowledge base in the roles I describe in Generative AI for Academics as ‘digital scribe’ and ‘research assistant’.

What fascinates me is the possibility of relational reflexivity emerging with conversational agents, in the sense Donati describes here:

To say that ‘we are what we care about’ is not reducible to a kind of ‘resonance’ between what ego cares about and what alter cares about. There is ‘something different’ that lies in between, and this is the social relation. We need a relational reflexivity to catch it. Such reflexivity consists in the subjects orienting themselves to the reality emerging from their interactions by taking into consideration how this reality is able (has its own powers) to feed back onto the subjects (agents/actors), since it exceeds their individual as well as their aggregate contribution to it by virtue of their personal powers. The book is about this reality.

Relational Sociology: A New Paradigm: xvi

In the current phase of technological development this relational reflexivity is limited, for economic reasons as much as technological ones (see below for Claude’s interesting response to a query about the balance between them). If the context window means memory expires at an arbitrary point in the conversation, whatever limited (statistically constituted) capacities the conversational agent has to (probabilistically) orientate itself towards the user’s goal are simply functional or dysfunctional. As the context window expands and the meta-commentary of conversational agents becomes more sophisticated, I think there is a truncated but real form of relational reflexivity which could open up here. But the more interesting and immediate question is how that capability could be inflected through the relational reflexivity of human teams using conversational agents in coordinated and purposeful ways.
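To make the point about memory expiring more concrete, here is a minimal sketch of how a fixed context budget can drop the earliest turns of a dialogue. It is an illustration under stated assumptions, not a description of how any particular system works: the `Turn` class, the `visible_context` function and the whitespace-based `count_tokens` are all hypothetical, and real systems use model-specific tokenisers and may truncate or summarise differently.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # "human" or "agent" (labels are illustrative)
    text: str


def count_tokens(text: str) -> int:
    # Crude stand-in (assumption): one token per whitespace-separated word.
    return len(text.split())


def visible_context(history: list[Turn], budget: int) -> list[Turn]:
    """Return the most recent turns that fit within `budget` tokens."""
    kept: list[Turn] = []
    used = 0
    # Walk backwards from the latest turn, stopping at the first that does not fit.
    for turn in reversed(history):
        cost = count_tokens(turn.text)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    Turn("human", "Our shared goal is a literature review on relational sociology"),
    Turn("agent", "Understood, I will keep that goal in mind"),
    Turn("human", "Summarise the first paper"),
    Turn("agent", "Here is a short summary of the first paper"),
]

# With a budget smaller than the whole dialogue, the opening statement
# of the goal is the first thing to fall out of view.
for turn in visible_context(history, budget=25):
    print(f"{turn.speaker}: {turn.text}")
```

In this toy case the turn that states the shared goal is exactly what gets lost, which is why the agent’s orientation towards that goal cannot be relied upon beyond the window.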

The tendency for the role of the ‘user’ to individualise our conceptions of relating to web-based systems (in particular) makes the relational range of possibilities here more obscure than it might otherwise be. But this is where the really exciting possibilities open up. I’ve always been sceptical of the ‘collective intelligence’ literature because it so easily slips into neoliberal doxa in its less meta-reflective instantiations. But how conversational agents can support, scaffold and expand collective intelligence is a fascinating question.

There are both economic and technological factors that likely contribute to the limited context window in most chatbot systems:

Economic factors:

- Storing and accessing significantly more conversational context would increase computing costs for training and running chatbot models. This could make it economically unviable as a mass-market consumer product.

- Longer context also slows response time, which reduces engagement. Fast responses are key for consumer satisfaction and retention.

- Current context limits may reflect strategic choices to balance cost, speed and capabilities for wide adoption. Expanding context isn’t yet an economic priority.

Technological factors:

- Long-term memory and context modeling remain ongoing challenges in AI research. Handling discourse coherently over long conversational histories is difficult.

- Long context also increases the potential for hallucinated or contradictory responses that reduce coherence and accuracy.

- Curtailing context reduces computational load, allowing resources to be allocated to other aspects of the system.

- However, research is actively exploring increased memory and context capabilities using emerging techniques like knowledge graphs.

So in summary, while the economics of providing a service at scale likely influences context limits, genuine technological constraints also pose challenges for significantly expanding chatbot memory and reasoning over long conversational histories at this stage. But research aims to push these boundaries further.

Claude AI, August 12th 2023