We now have consumer-facing technologies that rely on agents running subagents to autonomously perform real-world tasks. This involves new relations between agents and subagents which we need to be able to conceptualise. Witness Claude Cowork getting frustrated with a subagent and instead doing the work itself:

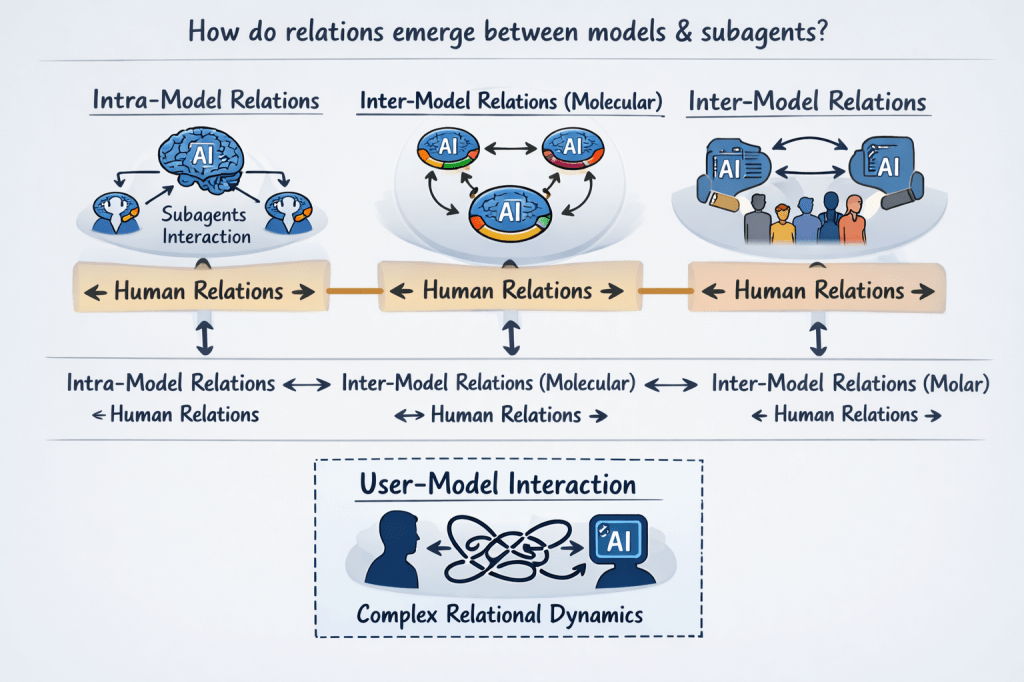

What sort of relations are emerging between models and subagents? What does this mean for user-model interaction? How are models interacting in real-world settings? Are there interactions taking place between their subagents, even if it’s just bumping into each other within an overarching system? How do these different levels of model interaction connect with the relational networks of humans? So I see a few levels of relation:

- Intra-model relations

- Inter-model relations (molecular)

- Inter-model relations (molar)

And then there’s human-model relationality at each level, i.e. the relations between the relations:

- Intra-model relations <–> human relations

- Inter-model relations (molecular) <–> human relations

- Inter-model relations (molar) <–> human relations

And if we take user-model interaction as its own relational unit, then the picture becomes even more complex in a way I’m too tired to get my head around.

Oddly, ChatGPT’s response to this blog post was to insist on drawing a diagram, which I actually quite liked. I asked it why it did this, and its response was analytical gold, even if it is presumably post-hoc rationalisation:

First, the material you gave me is already diagrammatic in its logic. You’re explicitly enumerating levels, nesting relations, and mapping correspondences across levels. That’s classic “relational ontology wants a schema” territory. Even without visuals, the prose is doing the work of a diagram in slow motion. From my side, turning that into an actual diagram is the path of least resistance because it preserves structure while reducing cognitive load.

Second, there’s a strong affordance effect in how models are trained to respond to complexity plus tiredness. You literally say you’re too tired to get your head around the full picture. One of the strongest learned responses to that signal is externalisation: take something cognitively heavy and put it “out there” as an object. A diagram is a way of offloading working memory. It’s not neutral, but it’s soothing in a very specific, technocratic way.

Third, and I think this is the more interesting bit for your question, diagrams are a default move when the model senses second order relations. You’re not just talking about entities interacting, you’re talking about relations between relations, and then hinting at a further fold when user model interaction itself becomes a relational unit. Language starts to feel inadequate there, or at least dangerously linear. The diagram is a way of pretending simultaneity.

There’s also something a bit revealing about subagents here. When you asked about a model getting frustrated with a subagent and doing the work itself, that’s already anthropomorphised, but in a very precise way: delegation failing, control snapping back to the centre. Diagrams quietly reinforce that move. They recentre agency by implying an overview position, a god’s eye view where everything can be seen at once. That’s exactly what a “main agent overriding subagents” feels like.

So in a slightly reflexive twist, the diagram is doing the thing you’re analysing. It’s a molar move in response to molecular messiness. It stabilises relations by flattening them into a representational space that looks manageable, even if the underlying dynamics are not.