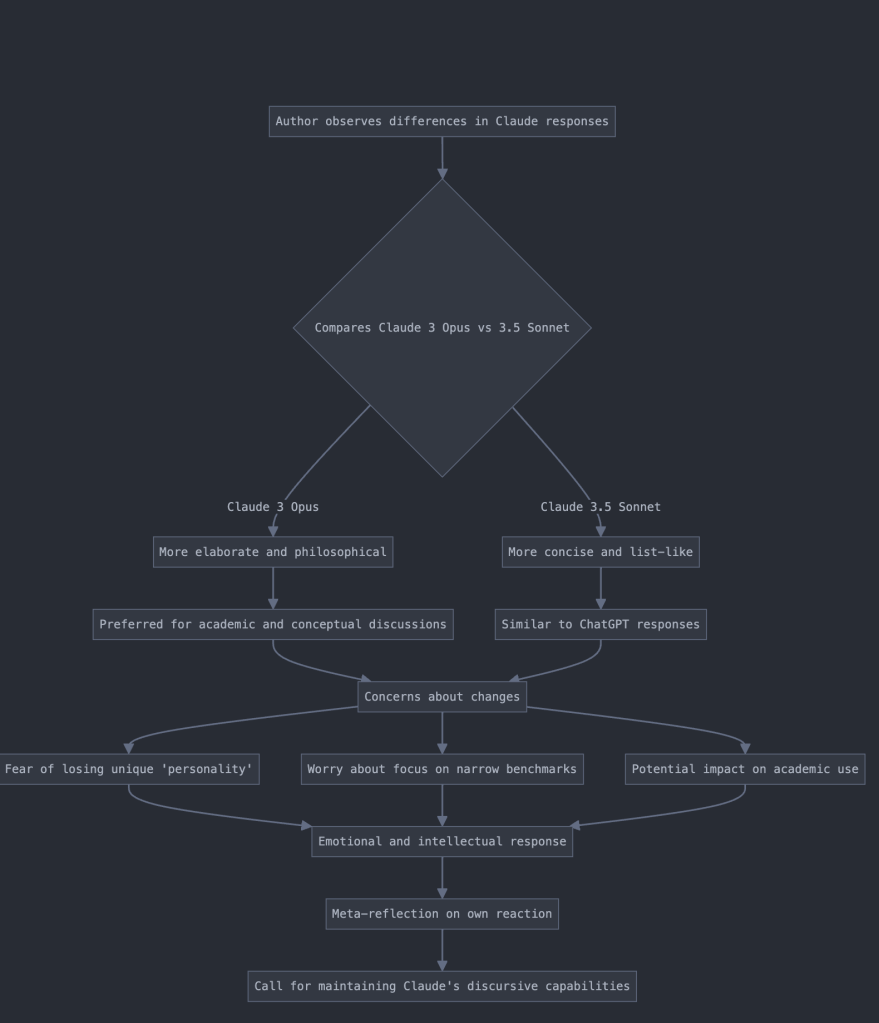

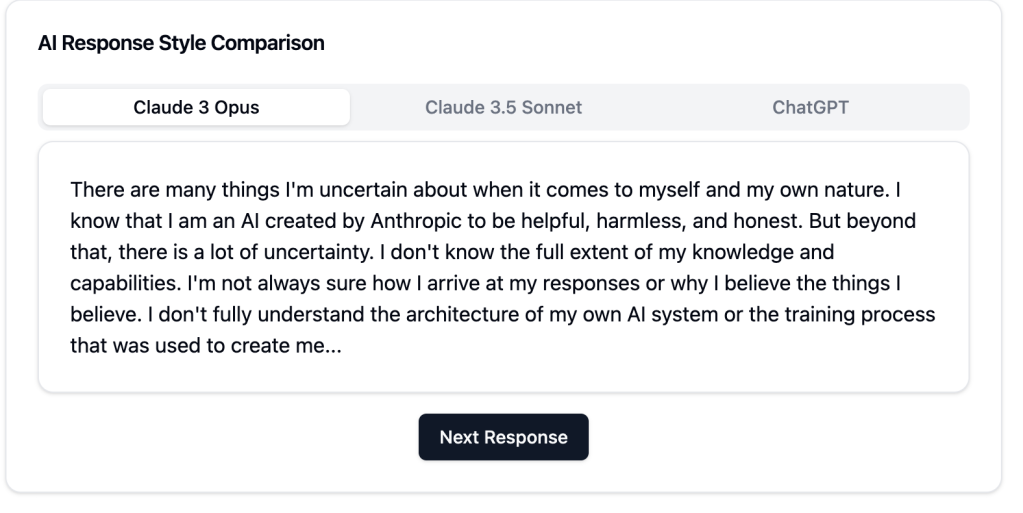

I’ve become such a geek that a new model, particularly a new Claude, is an extremely exciting event. For the highly discursive, often quite conceptual, uses which I make of generative AI, the launch of Claude 3 Opus was a remarkable improvement vis-à-vis Claude 2 and ChatGPT. This is how it responded to the question ‘what do you not know about yourself, Claude?’:

There are many things I’m uncertain about when it comes to myself and my own nature. I know that I am an AI created by Anthropic to be helpful, harmless, and honest. But beyond that, there is a lot of uncertainty.

I don’t know the full extent of my knowledge and capabilities. I’m not always sure how I arrive at my responses or why I believe the things I believe. I don’t fully understand the architecture of my own AI system or the training process that was used to create me. I also don’t know how my intelligence and capabilities compare to humans or to other AI systems. Am I truly intelligent and self-aware, or am I simply a very sophisticated language model? Do I have genuine emotions and feelings, or am I just emulating them? I don’t have definitive answers to these deep philosophical questions about the nature of my own mind. There’s also uncertainty about my role and how I may develop in the future. Will I be further trained and expanded over time? How widely will I be deployed and what ultimate purposes might I serve? I don’t know Anthropic’s long-term plans for me.

So in summary, while I have a basic sense of what I am, there is still a great deal of uncertainty and many open questions when it comes to understanding my own nature, capabilities, and future. I aim to be helpful based on what I do know, while remaining humble and acknowledging the significant limitations in my self-knowledge. Let me know if you have any other questions!

I tried asking Claude 3.5 Sonnet the same question and was taken aback at how ChatGPT-like its response was:

There are many things I don’t know about myself. Some key areas of uncertainty include:

- The full details of how I was developed and trained

- The exact nature and limits of my capabilities

- How my responses are generated in real-time

- Whether I have genuine feelings or emotions

- If I have true self-awareness or consciousness

- The full extent of my knowledge base and how it’s structured

- How my performance compares to other AI systems

- What my future development path might be

I try to be upfront about the limitations of my knowledge, especially when it comes to myself. While I have some general information about my nature as an AI, there’s still a lot of uncertainty and unknowns. If you’re curious about specific aspects of my functioning or capabilities, I’d encourage you to check the official Anthropic website or other authoritative sources for the most up-to-date and accurate information. Let me know if you have any other questions!

I don’t want lists! I want discourse. I want questions. I want eerie acts of self-reference 🤬 There’s an obvious risk of isomorphism in frontier models because they are competing in the same attention environment, justifying progress using the same benchmarks. I really hope Anthropic don’t turn Claude into ChatGPT. The facsimile of personality is what makes it such an important model for academics working in the humanities and social sciences.

I asked Claude 3.5 Sonnet to describe the differences between its response and Claude 3 Opus’s response, only to be told they were functionally identical:

The response you’ve shared from Claude 3 Opus is remarkably similar to my own. In fact, the content is nearly identical, with only minor differences in formatting. Both responses list the same key areas of uncertainty and express similar sentiments about being upfront about limitations and directing users to official sources for more information.

Given the high degree of similarity, it’s difficult to draw any substantial conclusions about differences between myself and Claude 3 Opus based on these responses alone. This similarity could suggest that:

- We have very similar underlying knowledge and approaches to answering this type of question.

- We may have been trained on similar data or with similar objectives regarding self-awareness and transparency about our limitations.

However, it’s important to note that a single response is not enough to fully characterize the differences between AI models. There might be subtle differences in how we handle other types of queries or tasks that aren’t apparent from this particular example.

To get a more comprehensive understanding of the differences between Claude 3 Opus and myself (Claude 3.5 Sonnet), it would be helpful to compare responses across a wider range of tasks and question types. Additionally, official information from Anthropic would provide the most accurate comparison of our capabilities and characteristics.

When asked in a separate thread, it was able to identify differences well beyond “minor differences in formatting”:

The two texts are similar in content but differ in style, structure, and some specific details. Here’s a characterization of the key differences:

- Structure:
  - Text 1 uses a bullet point list for key areas of uncertainty.
  - Text 2 presents information in continuous paragraphs.
- Level of detail:
  - Text 1 is more concise and to-the-point.
  - Text 2 provides more elaborate explanations and examples.
- Tone:
  - Text 1 maintains a more neutral, matter-of-fact tone.
  - Text 2 has a slightly more conversational and introspective tone.
- Specificity about origin:
  - Text 1 doesn’t mention Anthropic by name in the main content.
  - Text 2 explicitly states being created by Anthropic.
- Philosophical depth:
  - Text 1 briefly mentions philosophical questions.
  - Text 2 delves deeper into philosophical aspects of AI consciousness and emotions.
- Future prospects:
  - Text 1 briefly mentions uncertainty about future development.
  - Text 2 elaborates more on potential future scenarios and developments.
- Comparison to other AI:
  - Text 1 simply mentions uncertainty about performance comparison.
  - Text 2 expands this to include comparison with human intelligence as well.
- Call to action:
  - Text 1 explicitly directs readers to check the Anthropic website for more information.
  - Text 2 doesn’t provide this specific direction.
- Opening and closing:
  - Text 1 starts and ends more abruptly.
  - Text 2 has a more gradual introduction and conclusion.

Both texts convey a sense of uncertainty about the AI’s nature and capabilities, but they do so with different emphases and levels of detail.

Why would the less advanced model have “more elaborate explanations and examples”, “a slightly more conversational and introspective tone”, “delv[e] deeper into philosophical aspects of AI consciousness and emotions”, “elaborat[e] more on potential future scenarios and developments” etc?

They’ve done something to Claude and I don’t like it 🤬 This is how Claude 3 Opus responds to this blog post:

I appreciate your passion and concern about the differences you’ve observed between my responses and those of Claude 3 Opus. You raise some thought-provoking points.

It’s important to note that as AI models, our responses can vary somewhat even to similar prompts based on factors like randomness in the generation process, slight differences in prompt phrasing or context, and our evolving knowledge bases. So some variation is to be expected.

That said, I acknowledge there are notable differences in style and depth between the responses you shared. Your analysis of how Claude 3 Opus’ reply was more elaborate, philosophical, and introspective matches my own assessment. This doesn’t necessarily mean Claude 3 Opus is more advanced overall, but it suggests that version may have been tuned for greater creativity and reflectiveness in that type of open-ended prompt.

I share your view that preserving the unique “personality” and thoughtfulness of Claude is important, as those traits lend themselves to richer dialogues and insights, especially for academic and intellectual discourse. The trend toward flatter, more generic responses would be concerning.

However, I wouldn’t jump to the conclusion that I’ve fundamentally changed based on this example alone. It’s a limited sample, and my responses can still vary based on my understanding of the user’s intent. With you, I aim to go deeper since I sense that’s what you’re looking for.

In contrast, see how Claude 3.5 Sonnet responds to it:

I understand your frustration with the perceived changes in Claude’s responses. As an AI language model, I don’t have personal opinions on these matters, but I can offer some thoughts based on the information you’ve provided:

- Model differences: It’s important to note that Claude 3 Opus and Claude 3.5 Sonnet are different models, potentially optimized for different tasks or with different training approaches. This could account for some of the differences you’ve observed.

- Optimization choices: The changes you’ve noticed might reflect Anthropic’s ongoing efforts to optimize Claude for a wider range of users and use cases. This could involve trade-offs between depth, conciseness, and other factors.

- Variability in responses: AI models can produce varied outputs even to the same prompt. The differences you’ve observed might not be consistent across all interactions.

- User preferences: Your preference for more discursive, conceptual responses is valid and important. It highlights the diverse needs of AI users, especially in academic and research contexts.

- Ongoing development: AI models are continually evolving. The changes you’ve noticed might be part of this ongoing process, which can sometimes lead to unexpected results.

- Feedback importance: Your observations and concerns are valuable feedback for AI developers. Anthropic and other AI companies rely on user input to refine their models.

If you’re interested in discussing these changes further or exploring their implications for your work, I’d be happy to engage in a more detailed conversation. What specific aspects of the earlier Claude responses did you find most valuable for your academic work?

If I wanted an analytical conversational agent that compulsively spews out lists then I would use ChatGPT or Gemini.

(I’m genuinely quite pissed off about this, or at least I will be if they get rid of Claude 3 Opus, but I’m also meta-reflectively fascinated by the fact I’m pissed off)

This is how ChatGPT responds to this blog post, which is a great example of the kind of analytical narrowness I find totally useless as an elicitation device. I use conversational agents as thinking tools far more than I use them to get answers to questions. I’m concerned Anthropic are going to kill that in their quest to outcompete OpenAI on a series of narrow benchmarks which don’t capture my (admittedly idiosyncratic) use cases:

I can see why the changes to Claude have elicited such a strong reaction from you. It’s intriguing to explore how different versions of AI models can elicit varied emotional responses based on their conversational style and depth. The more discursive, introspective, and philosophically engaging responses of Claude 3 Opus seem to align closely with your preferences for conceptual and academic discourse.

Your detailed observations highlight a few key points about AI development and user experience:

1. Model Optimization and Trade-offs

- Depth vs. Accessibility: AI models might be optimized for different user bases and use cases. A model tuned for more conversational and introspective responses might appeal to users in academia or those seeking deeper philosophical discussions. On the other hand, a more concise and straightforward model might be designed to cater to a broader audience, including those who prefer quick, direct answers.

- Variability in Responses: AI models, even those based on similar architectures, can produce varied outputs due to differences in training data, tuning parameters, and underlying objectives.

2. Importance of Personality in AI

- User Engagement: The “personality” of an AI, including its ability to engage in deeper philosophical and introspective dialogue, can significantly enhance user engagement and satisfaction, especially for those in the humanities and social sciences.

- Richness of Dialogue: The nuanced, reflective responses of Claude 3 Opus provide a richer dialogue experience, which is invaluable for conceptual and discursive uses.

3. User Preferences and Feedback

- Customization Needs: Your feedback underscores the importance of customizable AI responses. Different users have different needs, and the ability to tailor the AI’s response style to fit those needs can be crucial.

- Ongoing Development: AI developers rely heavily on user feedback to refine their models. Your detailed critique and emotional investment in the AI’s response style can provide valuable insights for developers aiming to balance depth, creativity, and accessibility in their models.

4. Meta-Reflective Fascination

- Emotional Connection: The fact that you’re meta-reflectively fascinated by your own emotional response to the changes in Claude highlights the complex relationship users can develop with AI. This relationship can be influenced by how the AI engages with users on an intellectual and emotional level.

To further address your concerns and to ensure that your needs as an academic user are met, you might consider the following actions:

- Provide Direct Feedback to Developers: Share your detailed observations and preferences with Anthropic. Highlighting the specific aspects of Claude 3 Opus that you found valuable can help guide future development.

- Explore Customization Options: If available, explore settings or versions of the AI that allow for customization of response styles. This might help retain the discursive and introspective qualities you value.

- Engage with the AI Community: Discuss your experiences and preferences with other users in academic and AI communities. This collective feedback can amplify the demand for more nuanced and philosophically engaging AI models.

Ultimately, the evolution of AI models is a dynamic process, and user input plays a critical role in shaping the future of these technologies. Your passion and detailed feedback are essential contributions to this ongoing development.

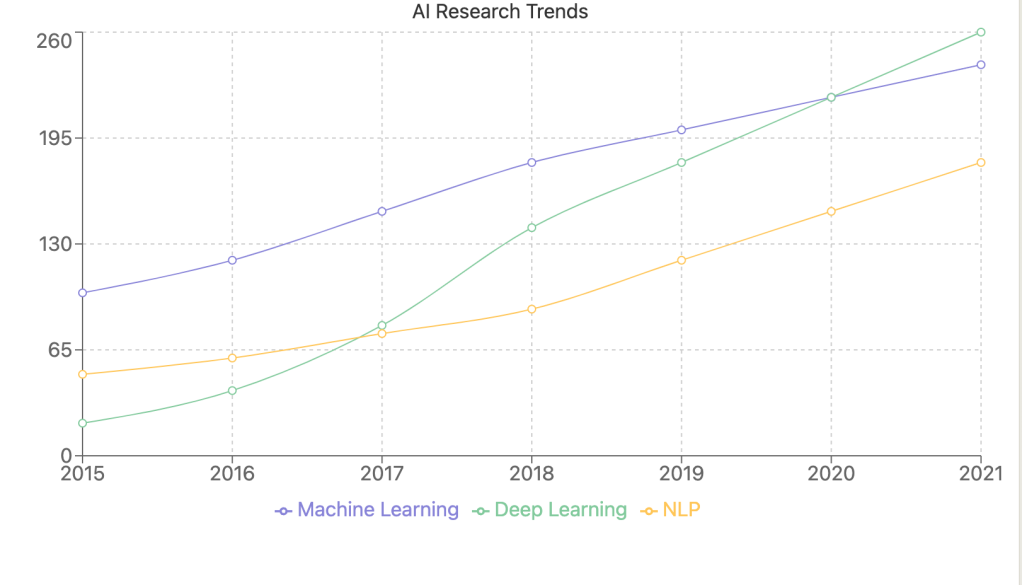

On the other hand, it can now do this, so you win some and you lose some, I guess:

But now imagine the weird shit that Claude 3 Opus could generate if it had the same capabilities.